Yesterday I was doing training for a team of architects, project managers, and developers at a client, and I realized that a deeper discussion of the issue at Facebook might be instructive.

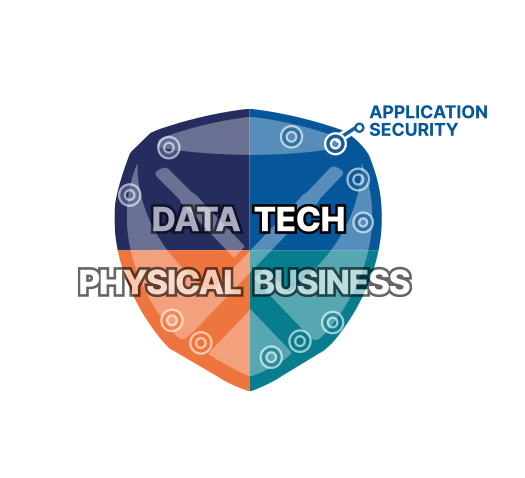

Looking at the issue in more detail, I came to see that it is a perfect learning example of why application security training, and team communication around security, are so important.

In particular I want to look at:

Before I go through this, though, let me stop and say this: Facebook may have a great security program. They are likely just as vulnerable as anyone else. This is not a critique of their process or their response. I am not aware of the back story or of the processes used (or not used) in this case. The goal of this post is to talk about the lessons we can take away.

Part 1: Facebook describes the first problem as an error related to the View As function. Essentially, the View As feature allows a user to see what a post will look like to another user. In that preview, a link to upload a video was inadvertently included.

A full discussion of this feature with a villain hat on might have revealed that the View As page should not have an upload link at all. This doesn't feel like a big deal, but it is a classic example of developers, product owners, and stakeholders not communicating adequately about functional or security requirements. I suspect a pen test would gloss right over this, because even a security expert might not be able to say for sure that the link shouldn't be there.
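One way to make a requirement like "the View As preview is read-only" visible is to write it down as code and a test that the whole team can read. The sketch below is a hypothetical illustration, not Facebook's implementation: `Post`, `render_post`, and the `view_as_id` parameter are invented names, and the assumption is simply that a preview should show content but never interactive actions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Post:
    """Hypothetical post model: some text plus interactive actions (e.g. an upload link)."""
    author_id: int
    text: str
    actions: list[str] = field(default_factory=list)


def render_post(post: Post, viewer_id: int, view_as_id: Optional[int] = None) -> dict:
    """Render a post for display (hypothetical helper).

    When view_as_id is set, the page is a read-only preview of what that
    user would see, so every interactive action is stripped.
    """
    if view_as_id is not None:
        # Preview mode: show the content only, never action links.
        return {"as_seen_by": view_as_id, "text": post.text, "actions": []}
    # Normal mode: the real viewer gets the post's actions.
    return {"as_seen_by": viewer_id, "text": post.text, "actions": list(post.actions)}
```

For example, `render_post(Post(1, "hello", ["upload_video"]), viewer_id=1, view_as_id=2)` returns an empty `actions` list. The value is less in the code than in the conversation it forces: someone has to decide, out loud, whether the preview may contain actions.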

Part 2: The application then generated an access token that was used during that process, and that access token was scoped to the mobile application. Again, this seems like an obvious potential issue. Someone could have, or should have, asked: is the scope of this access token correct? This is probably a security question.
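The question "is the scope for this token correct?" can also be made explicit in code. Below is a minimal least-privilege sketch, assuming a hypothetical table of the minimum scopes each feature needs; `FEATURE_SCOPES`, `AccessToken`, `mint_token`, and the scope strings are all invented for illustration and do not describe any real Facebook API.

```python
from dataclasses import dataclass

# Hypothetical: the minimum scopes each feature is allowed to request.
FEATURE_SCOPES: dict[str, frozenset[str]] = {
    "video_upload": frozenset({"video:upload"}),
}


@dataclass(frozen=True)
class AccessToken:
    """Hypothetical token: whose token it is and what it may do."""
    user_id: int
    scopes: frozenset[str]


def mint_token(user_id: int, feature: str, requested: set[str]) -> AccessToken:
    """Mint a token only if every requested scope is one the feature needs."""
    allowed = FEATURE_SCOPES.get(feature, frozenset())
    excess = set(requested) - allowed
    if excess:
        # Fail loudly instead of silently handing out broader rights.
        raise PermissionError(f"{feature!r} may not request {sorted(excess)}")
    return AccessToken(user_id=user_id, scopes=frozenset(requested))
```

With this sketch, `mint_token(42, "video_upload", {"video:upload"})` succeeds, while also asking for a broad scope such as the hypothetical `"mobile_app:full"` raises `PermissionError`. Keeping the allowed scopes in one reviewable table is a design choice that puts the scoping decision in front of reviewers rather than burying it in the call site.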

Part 3: The access token was scoped to the wrong user, the "View As" user. Again, this is an issue where, if someone had said it out loud, even a product manager could have told us it was wrong. So this looks like an example where simply having the conversation, or making security visible, would have gone a long way toward eliminating the security issue.
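The rule a product manager would state ("the token belongs to whoever is actually logged in, not the person being previewed") can be captured in a couple of lines. This is a hypothetical sketch: `RequestContext`, `token_owner`, and `check_token_owner` are invented names, and it assumes the request context tracks the signed-in user separately from the View As target.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RequestContext:
    """Hypothetical per-request context."""
    authenticated_user_id: int             # who is actually signed in
    view_as_user_id: Optional[int] = None  # whose view is being previewed, if any


def token_owner(ctx: RequestContext) -> int:
    """Return the user a token for this request belongs to.

    The View As target only changes what is displayed; it never changes
    whose credentials are issued, so the answer is always the signed-in user.
    """
    return ctx.authenticated_user_id


def check_token_owner(ctx: RequestContext, token_user_id: int) -> None:
    """Defensive check a reviewer might ask for before a token is returned."""
    if token_user_id != ctx.authenticated_user_id:
        raise PermissionError("token was issued to a different user than the caller")
```

In a request where user 1 is viewing as user 2, `token_owner` returns 1, and `check_token_owner(ctx, 2)` raises. The check is deliberately redundant with `token_owner`; its job is to fail loudly if some other code path mints a token for the wrong user.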

It is worth noting that none of these items could be found by static analysis, dynamic analysis, RASP/IAST, or similar tools.

Ways to find this issue include:

From my perspective, one takeaway is that we should be peer reviewing any code that involves access tokens. It also suggests that security training would be helpful.
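As a rough sketch of how a team might make sure token-related changes actually reach a security-aware reviewer, the helper below flags changed files whose contents mention access tokens or scopes. The function name and the pattern are hypothetical; a real team would tune the pattern to its own codebase and wire it into whatever review tooling it already uses.

```python
import re

# Hypothetical pattern: matches "access_token", "AccessToken", "access token",
# and the words "scope"/"scopes". Tune for your own codebase.
TOKEN_PATTERN = re.compile(r"access[_\s]?token|\bscopes?\b", re.IGNORECASE)


def files_needing_token_review(changed_files: dict[str, str]) -> list[str]:
    """Return, sorted, the paths whose changed content mentions tokens or scopes."""
    return sorted(
        path for path, text in changed_files.items() if TOKEN_PATTERN.search(text)
    )
```

For example, given `{"upload.py": "token = mint_access_token(user_id)", "README.md": "Fix a typo.", "auth.py": "required = {'scope': 'video'}"}`, it returns `['auth.py', 'upload.py']`. A keyword match is crude and will miss things, so it complements peer review rather than replacing it.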